INTRODUCING:

SIP PRESSURE DATA & MAPS

B3 Insight’s SIP (Subsurface Interval Pressure) formation pressure data and maps offer the knowledge and insights you need to enhance water management and water injection strategies in the Permian Basin, Haynesville, and Bakken.

STAY INFORMED ON WATER REUSE TRENDS AND INSIGHTS

New Mexico Water Reuse Benchmark Q2 2023

The New Mexico Water Reuse Benchmark Report provides detailed tracking of produced water usage by top E&P companies in the Permian Basin.

Permian Basin Seismic Response Area (SRA) Impacts

Download a free, data-packed summary of the recent seismicity regulations in the Permian Basin, with the latest data from the Texas RRC and the New Mexico OCD.

The most comprehensive and trusted data for intelligent water decisions

Environment & Conservation

- Understand the land-water nexus

- Determine water consumption intensity

- Track and reduce environmental impact

Finance & Business Development

- Perform strategic market analysis

- Manage service portfolios

- Improve investment evaluations

Asset Inventory & Operations

- Analyze facility effectiveness

- Reduce lease operating expenses

- Optimize water management

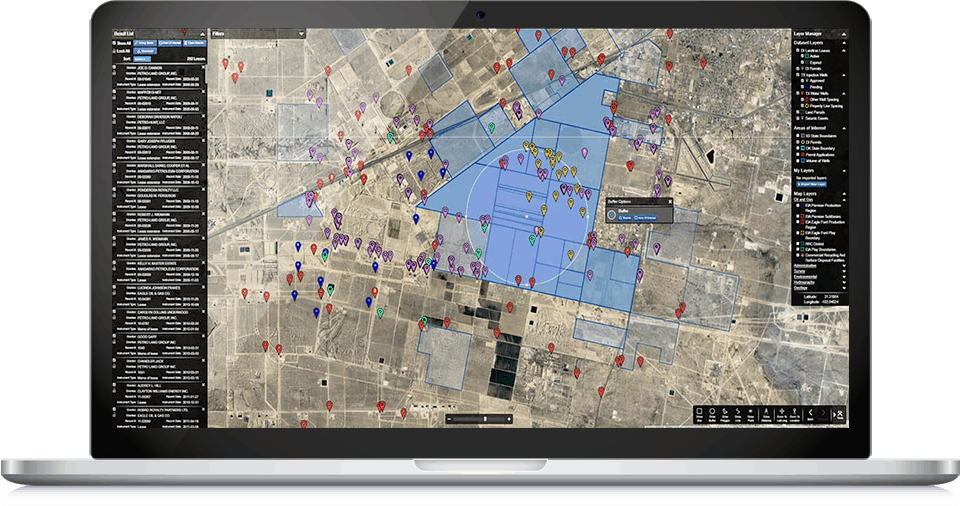

Oilfield Water Intelligence

Better Data. Better Insight. Better Outcomes.

Evaluate assets, enhance operational efficiencies, mitigate risk, allocate capital, and benchmark performance while saving significant time, investment, and resources – all with one intuitive platform.

We develop technology and insight that enable you to make responsible and profitable decisions about water resources. B3’s flagship product is the leading water intelligence platform for upstream energy.

See what B3 Insight can do for you.

Water Risk is Business Risk.

Reduce water risk, find opportunities, and align with investor expectations.

Evaluate water-related company risk and opportunities with the only independent tool for market analysis, benchmarking, and peer-to-peer performance evaluation.

Learn more about why water risk in oil and gas matters and how to mitigate risk and identify opportunities.

Water Data Solutions

Make decisions quickly and with confidence

OilFieldH20 Platform

- Cloud-based platform for produced water and data analytics

- Unsurpassed visibility for the upstream and midstream energy industry

OilFieldH20 Insight

- Proprietary technical models for macro- and micro-level insights

- Permian Basin Water Study

- UIC Permit Application Monitor

- Consulting projects

Direct Insight Data

- Direct data imports

- Seamless access to B3 database servers

- Integration with business intelligence tools

Our Customers

Confidently using the most comprehensive and trusted data to make intelligent water decisions

Mitigate risk, unlock opportunities

Insight & Articles

Sharing reports, case studies, and articles to help you understand water intelligence.

Data Collaboration is a Rising Tide that Lifts All Ships

Reading Time: 3 minutes

Water management is evolving quickly, no matter what industry you consider. Whether it’s conservation, mining, utility supply, or oilfield water management, the need for data…

The Growing Pressures of Produced Water Disposal

Reading Time: 8 minutes

A version of this article appeared in the Journal of Petroleum Technology (JPT). Permian Basin Water Disposal: 35 billion barrels. 1.5 trillion gallons. 1 Toledo…

Agua es Vida

Reading Time: 3 minutes

Agua es vida. Water is life. I recently re-watched a Western Landowners Alliance short film by this title (you can watch it HERE) and it…